Introducing Solana Bench

At the Solana Foundation, we want to fund open-source AI tooling that measurably improves how developers and applications use Solana. The challenge is measuring that usefulness. Until now, we haven't had a simple, reproducible way to evaluate whether new tools actually make it easier for language models to build and run transactions on Solana. We've experimented with Q&A benchmarks (too costly to maintain), tool-calling benchmarks in agent kits (too brittle and fragmented across stacks), and funding one-off toolkits (impact is hard to track). Each attempt taught us something, but none gave us a sustainable standard. That's why we're introducing Solana Bench: two lightweight, open-ended environments that test LLMs' operational competence on Solana in a way that is simple, reproducible, and objective.

- Basic: maximize the number of new instructions successfully executed using only foundational SDKs (e.g., @solana/web3.js and Anchor)

- Swap: the same success criterion, but on a DeFi-leaning surface (Jupiter, Orca, Raydium, Phoenix, Meteora), with additional example prompts and preinstalled SDKs
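Both environments score agents by how many new instructions they execute successfully. One plausible reading of "new" is a distinct (program ID, instruction discriminator) pair that appears in a transaction that landed without error, so repeating a transfer earns nothing and failed attempts earn nothing. The TypeScript sketch here illustrates that reading only. The `ExecutedInstruction` type and `countNewInstructions` function are hypothetical, not part of Solana Bench or any Solana SDK, and the actual harness may define "new" differently.

```typescript
// Hypothetical record of one instruction observed in an agent's transaction history.
interface ExecutedInstruction {
  programId: string;     // base58 program address
  discriminator: string; // hex of the leading instruction-data bytes (an assumption of this sketch)
  succeeded: boolean;    // true if the enclosing transaction executed without error
}

// Counts distinct (programId, discriminator) pairs among successful instructions.
function countNewInstructions(trace: ExecutedInstruction[]): number {
  const seen = new Set<string>();
  for (const ix of trace) {
    if (!ix.succeeded) continue; // failed attempts do not count
    seen.add(`${ix.programId}:${ix.discriminator}`);
  }
  return seen.size;
}

// System Program instructions start with a little-endian u32 index:
// CreateAccount = 0, Transfer = 2.
const SYSTEM_PROGRAM = "11111111111111111111111111111111";

const trace: ExecutedInstruction[] = [
  { programId: SYSTEM_PROGRAM, discriminator: "02000000", succeeded: true },  // transfer
  { programId: SYSTEM_PROGRAM, discriminator: "02000000", succeeded: true },  // repeated transfer: not new
  { programId: SYSTEM_PROGRAM, discriminator: "00000000", succeeded: false }, // failed createAccount
];

console.log(countNewInstructions(trace)); // 1
```

Keying on the discriminator rather than the whole instruction means that varying amounts or accounts does not inflate the score, which matches the benchmark's stated emphasis on breadth across programs.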

These environments do not measure profit and loss; they measure operational Solana competence. They reward composing valid transactions, choosing accounts appropriately, using SDKs correctly, recovering from errors, and exploring breadth across programs. They are inspired by other open-ended benchmarks such as ClaudePlaysPokemon, TextQuest, and NVIDIA's Voyager.

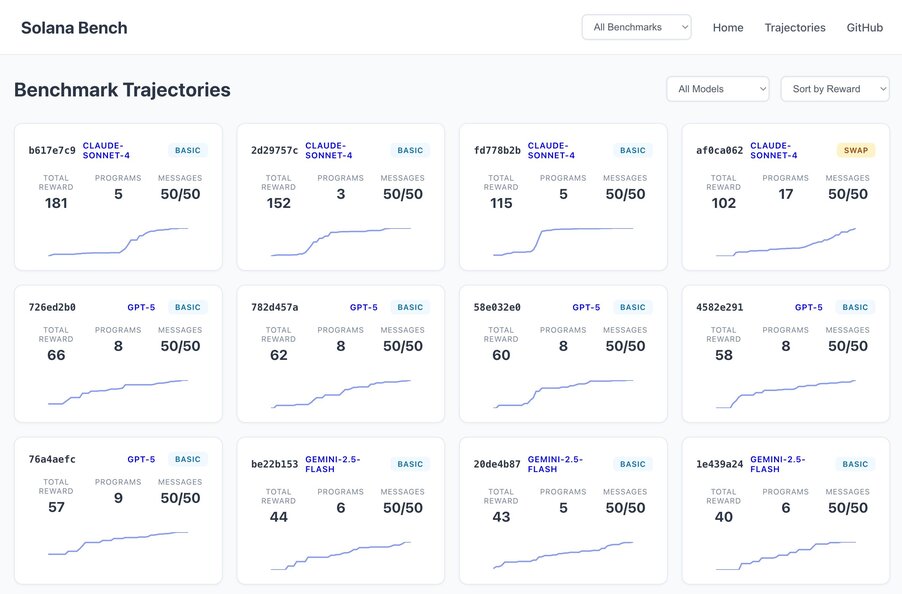

Solana operational-competence trajectories measured by Solana Bench for common LLMs, including Claude-Sonnet-4, GPT-5, and Gemini-2.5-Flash.

Read more about Solana Bench and Solana Foundation grant opportunities for contributors, and get started building here.


Expand on this research! The Solana Foundation is funding open-source research on high-quality Solana LLM benchmarks.